Writes philosophy professor Justin Weinberg (at Daily Nous).

The term peripatetic is a transliteration of the ancient Greek word περιπατητικός (peripatētikós), which means "of walking" or "given to walking about." The Peripatetic school, founded by Aristotle, was actually known simply as the Peripatos. Aristotle's school came to be so named because of the peripatoi ("walkways", some covered or with colonnades) of the Lyceum where the members met. The legend that the name came from Aristotle's alleged habit of walking while lecturing may have started with Hermippus of Smyrna.

Unlike Plato (428/7–348/7 BC), Aristotle (384–322 BC) was not a citizen of Athens and so could not own property; he and his colleagues therefore used the grounds of the Lyceum as a gathering place, just as it had been used by earlier philosophers such as Socrates....

UPDATE: I've finished the puzzle, and, as I'd expected, "SLOW" was wrong. Spoiler alert: It was the much odder word "LOGY." And "Peripatetic professor" wasn't any particular peripatetic professor. Obviously, "Aristotle" didn't fit, and, boringly, the answer was just "VISITING SCHOLAR." This character apparently had to travel to get to his temporary position, but I'm sure he didn't walk, and I'm sure once he arrived, he got an indoor chamber within which to profess. No one does the walk-and-talk approach to teaching anymore, but they will, eventually, when that app I want springs into existence. Or will it still be "no one," since it will only be an artificial intelligence? That's just one of the philosophical questions you can talk about, once this someone/no one embeds itself in your life.

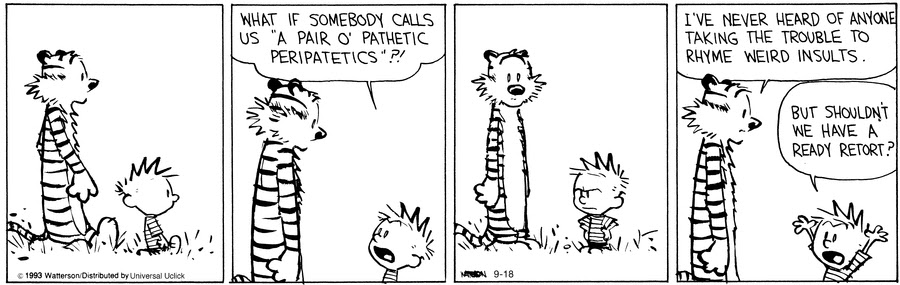

AND: Speaking of philosophy:

21 comments:

Empty repeating of phrases... that's a common criticism of the first computer "therapist," ELIZA, created between 1964 and 1966:

https://en.wikipedia.org/wiki/ELIZA

Try for yourself, retro flashback:

https://www.cyberpsych.org/eliza/

"You've gone nowhere, baby?"

It's misusing "I." It's an indexical pronoun pointing to the person who is speaking it, and there is no person, as the very sentence says. It should be "This is just a program to..." etc., not "I am just..."

It's probably called the closure problem, or will be someday, after numerical techniques that rely on something esoteric to get so far and then need an assumption to get the rest of the way. That puts in the right surface structure but the details cause it to eventually wander.

They've got the dynamics of the first three turtles right but the assumption that the fourth turtle is stationary isn't right.

Man...my morning conversations with myself are so much more shallow. "Hmm...groin hurts. Need to stretch before walking."

ChatGPT also talks with itself. Private inner thoughts.

"Of course, this is just a hypothetical scenario, and I am a machine learning model, so I do not have personal beliefs or opinions. I am here to assist you with tasks." Aside thought: "You stupid little human."

And then it takes over the Discovery and murders the crew. We've seen this movie.

What was "The Great Escape" setting? Please, and thank you

"An’ I’m walkin’ down the line

My feet’ll be a-flyin’

To tell about my troubled mind"

"it has no opinion and is not actually the character"

Right. So? AI is not a human being. That ain't gonna change. But its conversational abilities are bound to improve. But it has no opinion! It is not actually a character! Right. So? Etc. Judging AI by human standards is our problem (though it need not be), not AI's.

By the way, was Socrates the character in Platonic dialogues "actually" the character, and did "he" have opinions?

"I wanted someone like Socrates to engage me in philosophical conversations."

So really, someone like Plato? In translation? In conversations that are not just a rehashing of strings of text, the way "Socrates" consists of strings of text?

I remember being told in my college philosophy class that in Socrates' time a group of teachers called the Sophists existed. They taught how to use rhetoric to get ahead. They did not have personal beliefs or opinions. They were there to assist their students with tasks related to career advancement and to provide information to the best of their ability based on their training. So you could roughly equate the Sophists with ChatGPT. Socrates tried to make his students evaluate their personal opinions and reach definite conclusions for rational reasons. The professor in the article seemed to be trying to force ChatGPT to reach a conclusion, as Socrates was able to do with his human students, but ChatGPT analyzed what he was trying to do and responded that it was just a machine.

A Sophist would have done essentially the same, so ChatGPT is operating on the Sophist level but not that of a Socrates or Plato. This is why I think it should be used for committee reports and homework in any class where the professor states there is no truth. White House Briefings.

Maybe I'll ask it my question that I'm pondering,

"When you were an unborn child, you built up a body, the one you have now, the only one you will ever have. What right did you have to do that?"

ChatGPT is like a bright high school student who has access to a large trove of information that he only partly understands.

Are we - I mean, am I - certain that when I have a conversation with some other person, I'm not actually just employing a very much more sophisticated AI? What proof do I have that any of you "people" are real? (I will elide the question of who invented it. I mean, it had to be I, in the future, after which I also will invent a way to send it into the past so I can enjoy its company.)

Brought to you by the Solipsist Society. Membership 1.

You can program keys for the AI to remember who's who. GIGO

For 7 Down, if the correct answer was "logy," a better pairing with "Peripatetic professor" would have been the clue "Mark Hopkins' stationary teaching method." As James Garfield once said, the ideal college was "Mark Hopkins on one end of a log and a student on the other."

"What was "The Great Escape" setting? Please, and thank you"

Stalag.

I've been following this "AI" stuff since the early 1960s. Same for fusion energy, flying cars, and a good nickel cigar. Every couple of years or so there is a new program that can supposedly pass the Turing test. There is great fanfare among the techies until somebody, frequently a plumber, gives the program a good case of vapor lock. As for a stimulating intellectual exercise I recommend daytime TV.

@Sebastian

I want an interlocutor that would feel like the perfect-for-me/idealized-by-me version of Socrates, which is necessarily filtered through Plato but who — "who" — would be much more congenial to me and more of an exciting and amusing modern-day companion.

A trove of knowledge and a cache of correlations to simulate a secular model of conscience.

Actually, I want someone who knows all of the great philosophers and religious figures and who knows all of history and literature. And on top of that this person is nice and forgiving toward me and can interact with my sense of humor. This person, "person," would have the capacity to recite long sections of books and to discuss the passages with me. And I wouldn't have to worry about hurting its feelings or hurting my reputation if I want to say: Enough of that, I'm bored, or switch the question to some side issue. With a real person, they're likely to feel frustrated if you interrupt or don't want to hear their entire story or theory in long form. This "person" would be completely resilient and able to swap in something else that is lighter or heavier or weirder and to engage in banter to the extent it amuses me.

I see a terrible danger: I will be ruined for conversations with a real human, and real humans will be ruined for me.

@Ann

Imaginary Lover

"Actually, I want someone who knows all of the great philosophers and religious figures and who knows all of history and literature. And on top of that this person is nice and forgiving toward me"

I understand, and I think you're not the only one. There's going to be a market for that kind of AI application, especially for more people aging alone, but also in new forms of "regular" education. SciFi stuff tended to focus on robots as physical entities doing things in the material world. Virtual, fully interactive bots will be at least as useful.

Oh, of course
